Every GTM system today has AI running somewhere in the background. It writes, cleans, summarizes, and fills in data. That part has become normal.

But what still slows most teams down is how they use it.

A lot of stacks run heavy models for every small task. It looks fine on paper until you check your usage costs!

GPT-4o mini changed that.

It gives you comparable output quality on short, repeatable work without the cost and latency of a larger model. That’s why it’s become the go-to model for outbound and GTM teams that build inside Clay.

Where it fits in your flow

If you look at your own setup, most of what happens daily is simple.

You’re enriching leads, cleaning data, summarizing profiles, fixing tone in short messages.

None of that needs deep reasoning. It just needs to run fast and stay consistent.

That’s where 4o mini works best. You can call it several times per row inside Clay without worrying much about cost or delay. It handles the small steps that keep your system running.

When you start using it, you’ll feel the difference.

Columns fill faster, automations finish in seconds, and your runs don’t stall midway. The motion feels lighter.

What teams are noticing

Many GTM builders have already switched from GPT-3.5 Turbo or full GPT-4o to the mini version for daily automations. The reason is usually the same: it handles everyday tasks just as well, but it costs less and runs faster.

If you process a few hundred rows a day, the change might look small. If you process a few thousand, the savings are immediate.

You spend less time waiting, and your credits stretch longer.
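To see why volume changes the picture, here is a rough back-of-the-envelope calculator. It is a minimal sketch, assuming you pay per token (e.g. through your own OpenAI API key) rather than in Clay credits, and the per-million-token prices below are assumed list prices at the time of writing, so check the current pricing page before relying on them. The token counts and calls per row are hypothetical placeholders; swap in numbers from your own prompts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    """Per-million-token prices in USD (assumed values; verify against current pricing)."""
    name: str
    input_per_m: float
    output_per_m: float


def daily_cost(price: ModelPrice, rows: int, in_tokens: int,
               out_tokens: int, calls_per_row: int = 1) -> float:
    """Estimated USD cost of running `calls_per_row` prompts on each of `rows` rows."""
    calls = rows * calls_per_row
    input_cost = calls * in_tokens * price.input_per_m / 1_000_000
    output_cost = calls * out_tokens * price.output_per_m / 1_000_000
    return input_cost + output_cost


# Assumed list prices at time of writing; prices change, so treat these as placeholders.
MINI = ModelPrice("gpt-4o-mini", 0.15, 0.60)
FULL = ModelPrice("gpt-4o", 2.50, 10.00)

if __name__ == "__main__":
    # Hypothetical workload: ~400 input and ~100 output tokens per call, 3 calls per row.
    for rows in (300, 5_000):
        mini = daily_cost(MINI, rows, 400, 100, calls_per_row=3)
        full = daily_cost(FULL, rows, 400, 100, calls_per_row=3)
        print(f"{rows:>6} rows/day  mini ${mini:.2f}  4o ${full:.2f}  saved ${full - mini:.2f}")
```

Because cost scales linearly with rows and calls, a per-row gap that looks trivial at a few hundred rows compounds quickly at a few thousand.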

The quality stays strong on short prompts, the kind you actually use in production.

You don’t need the model to be creative.

You need it to stay stable when you’re running multiple actions across lists.

Where you still need the heavier model

4o mini won’t replace everything. You’ll still need the larger model for ICP analysis, campaign logic, or research that needs deeper reasoning.

And that’s fine.

Those are one-off tasks, not the heartbeat of your system.

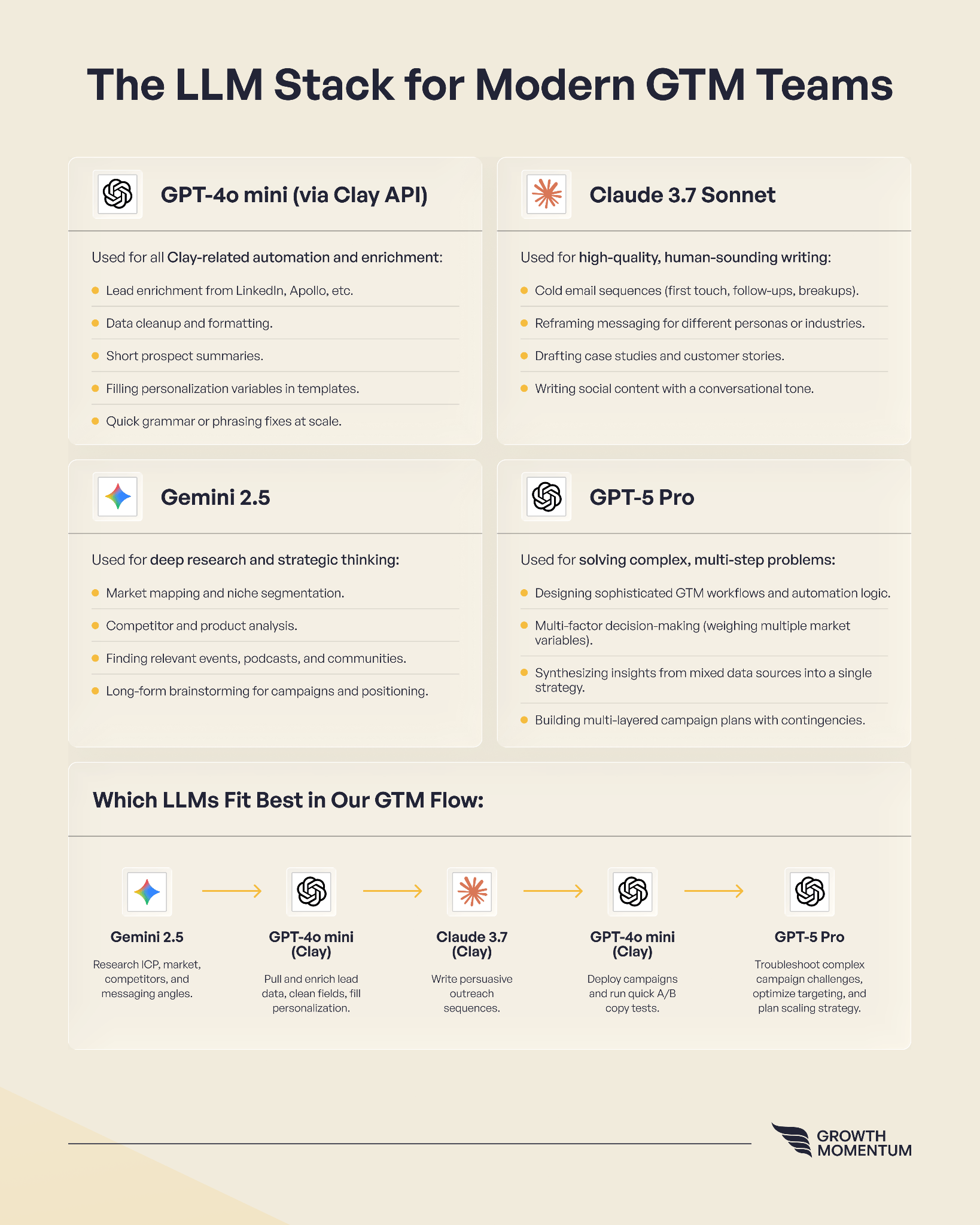

Our Current LLM Stack

For everything else, the mini model covers it. You can layer it into enrichment, cleanup, and short-form writing without a noticeable drop in quality.

The trick is to keep the separation clear. Use the heavier model where insight matters.

Use mini everywhere else, where consistency matters more.
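Inside Clay this split is a configuration choice rather than code, but writing the rule down makes it easy to keep consistent. Below is a minimal sketch, assuming you route calls yourself; the `Task` categories and `pick_model` helper are hypothetical, and the model names are assumed to be OpenAI API model ids.

```python
from enum import Enum


class Task(Enum):
    # Hypothetical task categories based on the split described above.
    ENRICH = "enrich"
    CLEAN = "clean"
    SUMMARIZE = "summarize"
    TONE_FIX = "tone_fix"
    ICP_ANALYSIS = "icp_analysis"
    CAMPAIGN_LOGIC = "campaign_logic"
    RESEARCH = "research"


# One-off tasks where insight matters go to the larger model.
HEAVY_TASKS = frozenset({Task.ICP_ANALYSIS, Task.CAMPAIGN_LOGIC, Task.RESEARCH})


def pick_model(task: Task) -> str:
    """Return the model id for a task; anything not explicitly heavy defaults to mini."""
    return "gpt-4o" if task in HEAVY_TASKS else "gpt-4o-mini"


if __name__ == "__main__":
    for task in Task:
        print(f"{task.value:<15} -> {pick_model(task)}")
```

Defaulting to mini means any new high-volume step you add stays cheap unless you deliberately promote it to the heavy list.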

The real shift

Most people used to chase the biggest model they could afford. That phase is over.

The focus now is on building systems that actually run clean at scale.

4o mini represents that shift.

It gives teams a way to use AI the way it was meant to be used in GTM: as part of the motion, not as a separate project. You stop worrying about cost per token, and you start thinking about flow per second.

That’s how outbound gets faster.

That’s how operations stay stable even when the volume goes up.

If you are still unsure how to put this together for your own motion, feel free to reach out. I am always happy to jump on a call and walk through your setup and strategy :)